What’s the best way to tackle COVID and climate change? Anthropology, says the woman who predicted the global financial crisis

Financial Times journalist and author Gillian Tett discusses her new book, Anthro-Vision: How Anthropology Can Explain Business and Life.

- 3 February 2022

- by World Economic Forum

In 2005, Gillian Tett wrote her colleagues at the Financial Times a memo arguing that the paper should devote more time to covering those submerged parts of the ‘Financial Iceberg’, such as credit and derivatives.

“The biggest risks in the world are not usually hidden through any dastardly, James Bond-style plot,” the FT’s US editor-at-large, who predicted the 2007-8 financial crisis, says today.

“The bankers weren’t concocting a wild scheme to bury what they were doing with financial innovation in 2005-6 into some kind of dark tunnel. Most of the problems were actually hidden in plain sight, but ignored because of the cultural patterns.”

In her new book, Anthro-Vision, Tett explains how a PhD in anthropology helped her foresee the financial crash: she realized that siloed bankers had tunnel vision, which stopped them from seeing the wider context and real-world implications of the products they were selling.

From COVID-19 to the climate crisis and artificial intelligence, Tett tells the World Economic Forum Book Club Podcast how taking a worm’s-eye, empathetic view of risks facing the world today can help to explain – and potentially solve – them.

What is the ‘business’ case for anthropology?

Gillian Tett: Humans are all shaped by cultural assumptions that we inherit from our environment. Culture doesn’t exist as boxes; it’s a spectrum of difference. One of the most important things we need to do in a world that’s both globalized and polarized is to recognize that the cultural assumptions we each inherit are very powerful, but they’re different. We can all benefit from trying to immerse ourselves in the lives and minds of others, both to understand them and so that we can then flip the lens and look back at ourselves with a lot more clarity.

There’s a wonderful proverb which says: ‘A fish can’t see water’. We can’t see our own cultural assumptions and biases, unless we jump out of our fish bowl, go and ask other fish what they think about us, and then look back at ourselves. When we look at that wider cultural context, we begin to understand why using mere quantitative tools to navigate the world, like corporate balance sheets or economic models, or big data sets, simply isn’t enough to capture the complexity of our cultural experiences today.

In the book, you advocate a more joined-up, interdisciplinary approach to problem-solving...

Gillian: COVID-19 has made it very clear you can’t beat a pandemic just with medical science, or big data or computer science. You need to combine it with social science to understand the cultures and behavioural patterns that shape how people do or don’t behave. And that extends right across all the problems which we’re facing today, such as climate change, income inequality, or both the promise and peril of big data and artificial intelligence. My plea in the book really is to try and start blending these different disciplines.

The good news is there is evidence this is happening. During the Ebola epidemic in West Africa, in 2014, after several months of trying and failing to beat Ebola just through medical science, there was a shift in policy on the part of the World Health Organization and others to embrace more use of behavioural science. That was really what brought the Ebola epidemic under control. A similar process of learning – sadly a slow one – has been happening with COVID-19. When it comes to the vaccination roll-out, there is more appreciation of the need to blend these disciplines. Now we need to apply these same principles to climate change battles, to tech battles and AI. There is some progress, but it’s still very patchy.

How can we use the lessons of anthropology to address climate change?

Gillian: Firstly, we have to understand people’s cultural experiences of climate change issues. In a conversation between [actor] Robert Downey Jr and John Kerry, the US climate envoy, on the World War Zero website, Downey Jr says most people are put off talking about climate because it seems scary and makes them feel guilty. We have to find a way to communicate these messages really effectively to consumers, and be sensitive to what is or is not blocking action by them, just as we’re having to work out why some people today are not taking vaccinations. We can’t assume that what makes sense to an American or European policymaker will make sense to consumers in other parts of the world.

The second way is by looking beyond the edges of your model. For so many years, economists treated the environment as something external to their models of how the economy was going to behave, so-called ‘externalities’. Corporate financiers and business people treated the environment as a footnote to what they were doing in the corporate accounts. They assumed that all of these resources were free, and could just be ignored. Corporate tools like economic models and corporate balance sheets are really useful – but they’re always defined by the limits of what you put into them; they’re bounded. And we have to learn to look beyond those limits, to get a sense of context. Looking at the environment is absolutely part of the context and is forcing a wider rethink of economics and corporate accounting.

The third way is simple: it’s a human tendency to put others in a box and shun people who seem different, but anthropology argues we can’t do that. We’re all interlinked in a spectrum of cultural difference, in a chain of humanity, and when the weakest link of that chain breaks, we often all suffer. We saw that with COVID: we saw the perils of ignoring what was happening in faraway lands, or of pretending you didn’t really know or care what was happening in Wuhan. And climate change is going to see that played out all over again. We cannot afford to ignore other people who seem different from us in a world that’s so tightly integrated as a global system.

Can anthropology be a tool for leaders to overcome unconscious bias at work?

Gillian: Having a diversity of perspectives in any workplace is really, really important: it might take more time to get to an answer, but it’s less likely to be really stupid. One of the reasons the 2008 financial crisis happened was there simply weren’t enough checks and balances in the financial system. Most of the people working in financial companies in the West were all from the same mentality, training, intellect, perspective; they often happened to all be men and were beset with groupthink and tunnel vision. To get some common sense into business leaders who often lack it, you need to have a common view, which goes beyond just one group of people.

Anthropology believes in the value of trying to immerse yourself in the minds of others, to then flip the lens back and look at yourself with fresh clarity. It helps us to think about all the things we ignore in our everyday world, the so-called social silences: the parts of our environment that we tend to overlook because they’re so familiar, or because we’ve labelled them as boring or geeky. Social silences are never irrelevant; they’re often crucially important for explaining how the world really works, and how we reproduce the patterns we have around them for the future. Getting other perspectives helps to uncover social silences.

Have we learned the lessons from the financial crisis?

Gillian: Yes, in some narrow ways: there won’t be another crisis caused by subprime mortgages, and I predict there probably won’t be another crisis caused by a shortage of capital in the regulated banking system. So that is all very encouraging. But I think there’s still a need to be more imaginative about forward-looking risks, and to recognize that threats almost always crop up where there are silos: they’re almost always found where problems fall between the cracks of existing institutions, and where people ignore the importance of cultural patterns, incentives, tribal behaviour and social dynamics.

What do you think are the biggest potential risks facing us in the future?

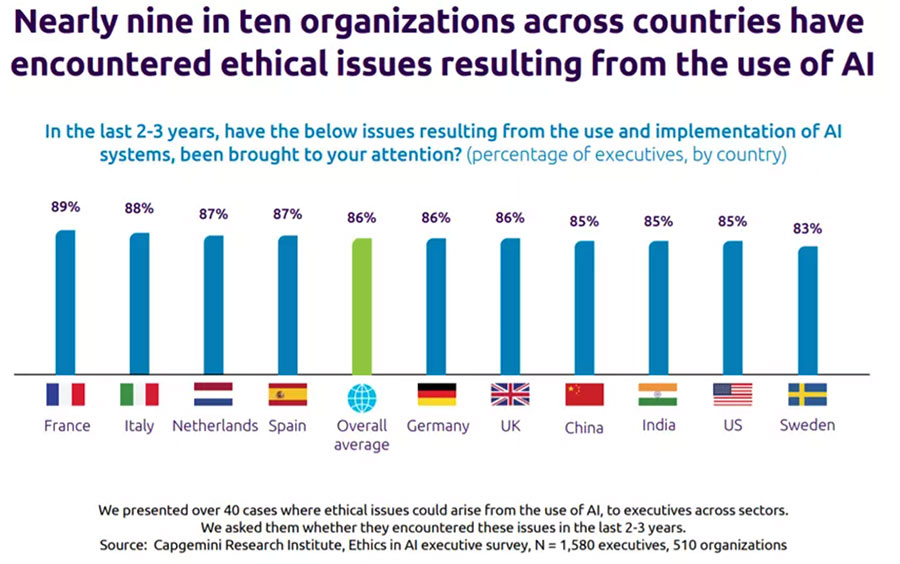

Gillian: We’ve seen a similar pattern of intense tribalism, groupthink and tunnel vision emerge in the tech sector in recent years. That’s worrying. We saw a propensity to ignore social sciences around medicine in the run-up to 2020. I wrote a column a couple of years ago saying that people were ignoring what was happening inside the geekier corners of medicine, and the risks of pandemics, just as they were ignoring the geekier corners of finance before 2007. Today we are ignoring some of the big ethical questions created by AI and finance.

We’re frankly also ignoring many of the issues around AI in general today, because once again, technical knowledge is held in the hands of a tiny group of elite technocrats, who the rest of the world tends to ignore because their activities are labelled as boring and geeky and dull, and therefore not of interest to everybody else.

How can anthropology help us think about AI?

Gillian: We need to understand the context in which AI is being created and implemented. The context of the people, the tribalism of the coders who are writing AI programmes, really matters. We’ve seen the way that you get embedded biases from the lack of diversity in coding teams. But we also need to think about the way that AI is implemented and construed in terms of product development, and whether the people who are implementing it have the ability to understand the social and ethical context. Alex Karp, head of Palantir, one of the big tech companies in Silicon Valley, pointed out in last year’s IPO filings that we’re putting enormous amounts of power in the hands of a small group of computing elite, who operate in silos, don’t necessarily want that level of power and probably aren’t equipped to deal with it.

Image: Capgemini Research Institute

AI is an amazingly useful tool. But it basically works by amassing vast quantities of data points about our human activity, looking for correlations and then extrapolating into the future. And there are limitations to doing that. Firstly, if you’re looking for data points about what we do and say, you tend to ignore social silences, because what we don’t say by definition doesn’t get recorded. Secondly, correlation is not causation: you can’t understand why people are doing things if you assume that everything can be judged just by looking at correlations and data points. Thirdly, contexts change, which means that what happened in the recent past doesn’t always reflect what’s going to happen in the future.

Culture is not a simple, clear-cut, linear pattern that can be analyzed with Newtonian physics. It’s an incredibly multi-layered, contradictory, baffling entity that exists in a spectrum of difference, not boxes, and is constantly changing in subtle ways. It’s more like a river.

AI platforms can scan financial markets, look at medical data, design rocket ships and play Go, but no AI platform has ever invented a good joke. Jokes by their nature define or articulate social groups, because you have to be inside a group, sharing its cultural assumptions, to get a joke. And jokes work by playing off all the different layers of our culture, including social silences. AI programmes are useful, but they have limitations, and so AI needs a second type of AI, Anthropology Intelligence, to work most effectively in the world today.


Author

Kate Whiting

Senior Writer, Formative Content

This article was first published by the World Economic Forum on 3 February 2022.
